In one of our recent blog entries we described how Bing Visual Search lets users search for images similar to an individual object manually marked in a given image (e.g., searching for a purse shown in a photo of your favorite celebrity). Since that post we have taken the functionality one step further: with our new Object Detection feature you no longer have to draw the boxes manually; Bing does that for you.

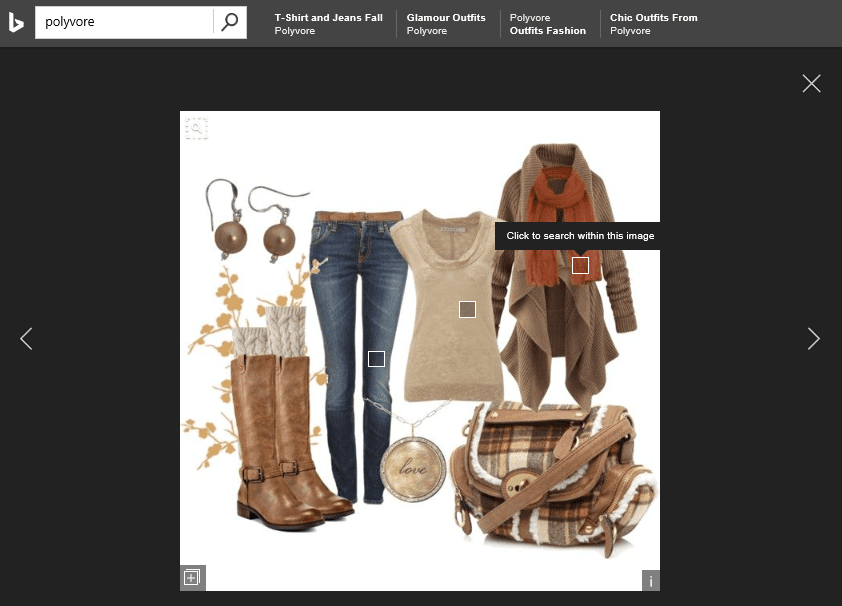

Going back to our main scenario, imagine you're looking for outfit inspiration and you end up on the Bing Image Details Page looking at an interesting set. Bing automatically detects several objects and marks them, so you don't have to fiddle with the bounding box anymore. Note the three hotspots below, each with a light grey background and a white border.

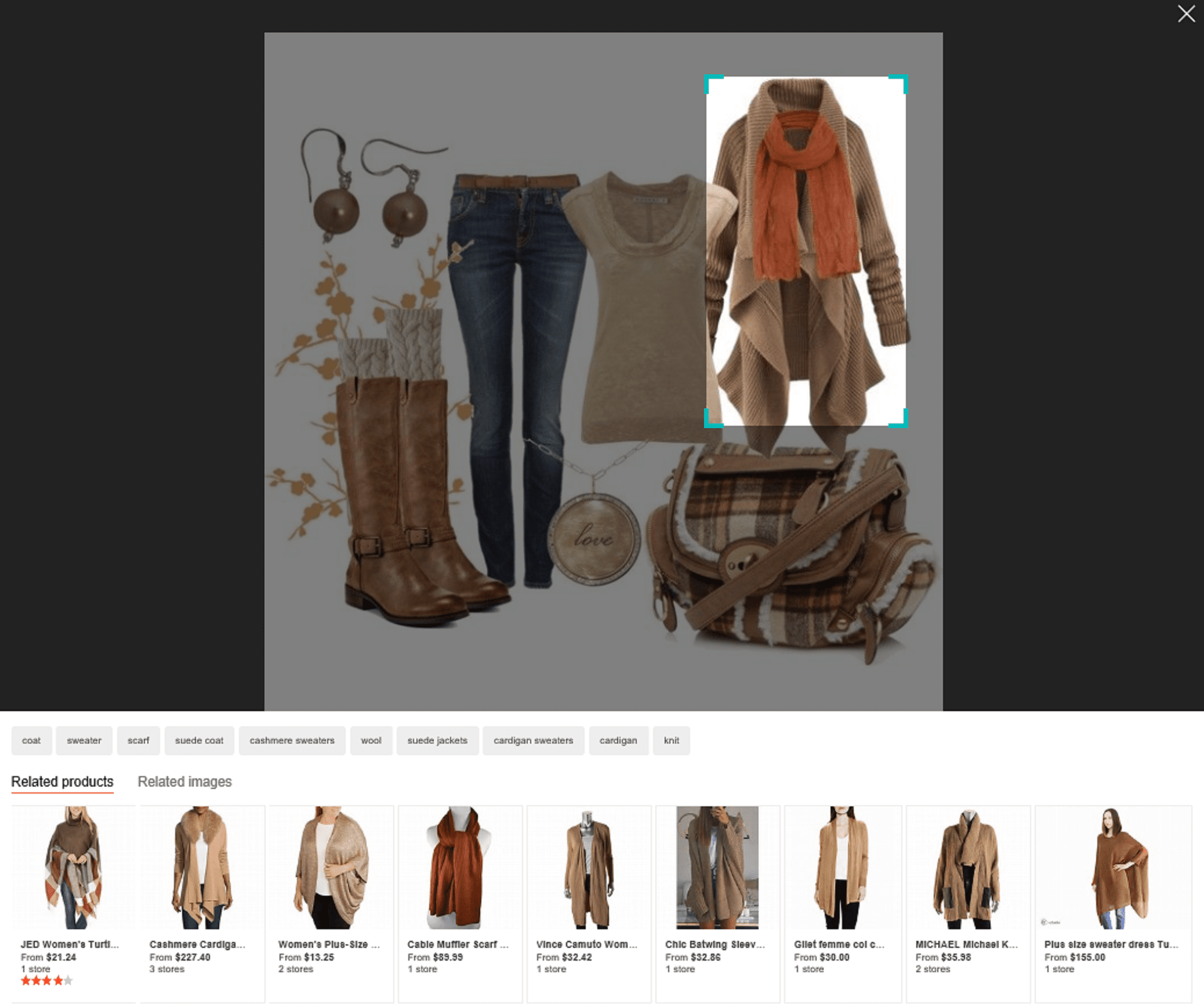

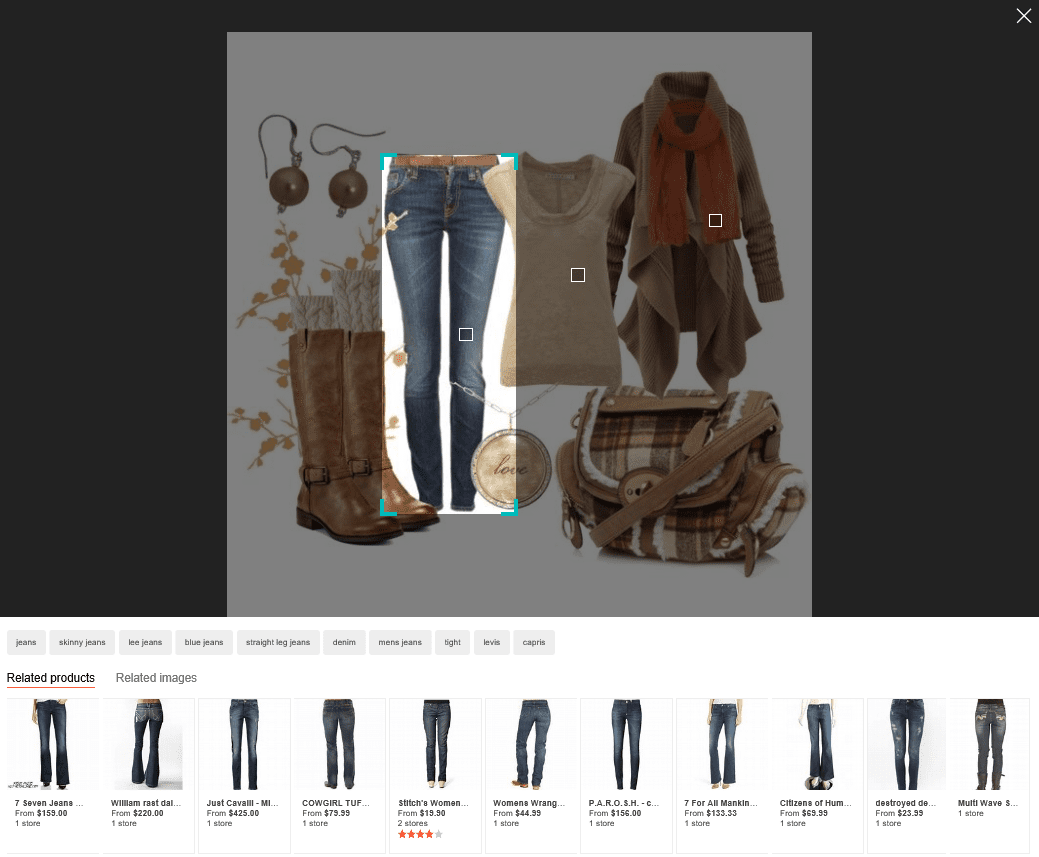

Now, if you click the hotspot over an object of interest, Bing automatically positions the bounding box in the right place for that object and triggers a search, showing the results in the Related Products and Related Images sections of the page.

To explore more, you can now adjust the box or try clicking on the other hotspots.

Here is also a short video with a live demo of Visual Search and Object Detection:

https://www.youtube.com/watch?v=-w803it0LVU

We invite you to play around with this functionality, but note that this is still an early version with limited coverage: it currently targets only certain categories of the fashion segment.

You are probably wondering how Object Detection was made possible, and that's what we cover in the next paragraphs.

Object Detection Model

The core of the underlying solution is the Object Detection Model. The goal of Object Detection is to find and identify objects in an image. We want to determine not only the category of each detected object but also its precise location and the area it occupies within the frame.
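To make that output concrete, here is a minimal Python sketch of what a single detection might carry: a category, a confidence score, and a bounding box from which location and occupied area follow. The class and field names (`BoundingBox`, `Detection`, `frame_fraction`) are illustrative assumptions, not the data model used in Bing.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class BoundingBox:
    """Axis-aligned box in pixel coordinates (hypothetical layout)."""
    left: float
    top: float
    right: float
    bottom: float

    def area(self) -> float:
        # Clamp at zero so a degenerate box never yields a negative area.
        width = max(0.0, self.right - self.left)
        height = max(0.0, self.bottom - self.top)
        return width * height

    def center(self) -> Tuple[float, float]:
        # A natural place to draw a clickable hotspot for the object.
        return ((self.left + self.right) / 2, (self.top + self.bottom) / 2)


@dataclass(frozen=True)
class Detection:
    """One detected object: what it is, how sure we are, and where it is."""
    category: str
    score: float
    box: BoundingBox


def frame_fraction(det: Detection, image_width: int, image_height: int) -> float:
    """Share of the whole frame covered by the detected object."""
    return det.box.area() / (image_width * image_height)


if __name__ == "__main__":
    det = Detection("handbag", 0.9, BoundingBox(100, 200, 300, 400))
    print(det.category, det.box.center(), frame_fraction(det, 800, 500))
```

The box center is one plausible anchor for a hotspot like those shown in the screenshots; how Bing actually places its hotspots is not described in this post.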

To get started, we needed to define the set of object categories we would support. Based on user activity on Bing, we noticed that fashion-related searches were quite popular among our users, so we decided to use that as an initial target. Having collected the training data, we moved on to the next step: training our models. Out of the several DNN-based object detection frameworks we evaluated for their balance of speed and accuracy, we selected the popular Faster R-CNN as the one best fitting our needs.

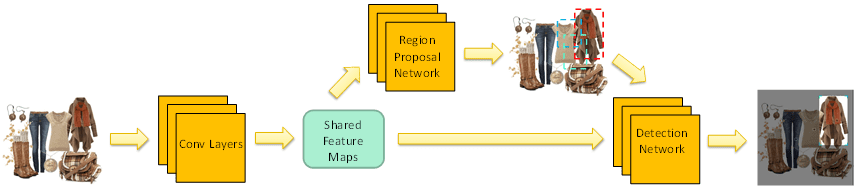

One crucial characteristic shared by most object detection algorithms is generation of category-independent region hypotheses for recognition, or “region proposals”. As compared to other frameworks where region proposals are generated offline, Faster R-CNN speeds the process up significantly enough for it to be done online. It achieves this by creatively sharing the full-image convolutional features between Region Proposal Network (RPN) and the detection network. Overall the structure of the Faster R-CNN network for object detection is as follows:
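As a toy illustration of that sharing, the Python sketch below wires up stand-in stages so that the convolutional features are computed once per image and then reused by both the proposal stage and the detection stage. Everything here is a stub under stated assumptions: `ToyFasterRCNN`, its method names, and the fixed boxes are hypothetical, and no real network or training is involved.

```python
from typing import Dict, List, Tuple

Box = Tuple[int, int, int, int]  # (left, top, right, bottom), hypothetical


class ToyFasterRCNN:
    """Stub pipeline showing the data flow only, not a real detector."""

    def __init__(self) -> None:
        self.backbone_calls = 0

    def backbone(self, image: str) -> Dict[str, str]:
        # Stand-in for the shared convolutional layers (the expensive part).
        self.backbone_calls += 1
        return {"feature_map_for": image}

    def region_proposal_network(self, features: Dict[str, str]) -> List[Box]:
        # Stand-in RPN: a real one predicts category-independent boxes
        # from the feature map; here we return two fixed boxes.
        return [(10, 10, 50, 80), (60, 20, 120, 90)]

    def detection_head(self, features: Dict[str, str],
                       proposals: List[Box]) -> List[dict]:
        # Stand-in detection network: classifies each proposed region
        # using the same feature map the RPN consumed.
        return [{"box": box, "category": "placeholder", "score": 0.5}
                for box in proposals]

    def detect(self, image: str) -> List[dict]:
        features = self.backbone(image)                      # computed once
        proposals = self.region_proposal_network(features)   # reuses features
        return self.detection_head(features, proposals)      # reuses features


if __name__ == "__main__":
    model = ToyFasterRCNN()
    detections = model.detect("outfit.jpg")
    print(len(detections), "detections,", model.backbone_calls, "backbone pass")
```

The point of the sketch is the single `backbone` call in `detect`: a pipeline that generated proposals separately would have to pay for feature extraction a second time, or precompute proposals offline.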

Product Deployment

Once we had finalized the model structure, we needed to work out the details of deployment. Given that Bing serves billions of users and that we show the results of object detection for every view of the detail page, providing a smooth user experience under all conditions at a sensible operational cost was no small challenge. Running Faster R-CNN object detection on standard hardware took about 1.5 seconds per image, which was clearly not workable.

The most obvious remedies, such as asynchronous calls or offline pre-processing, were not practical in this case. Even if detection were initiated asynchronously, the user would still likely face a noticeable delay before the computation completed. Pre-processing everything offline, on the other hand, would take a very long time given the billions of images in Bing's constantly changing index.

Luckily for us, our partners at Azure were just testing new Azure NVIDIA GPU instances. We measured that these instances accelerated inference on the detection network by 3x! In addition, by analyzing traffic patterns we determined that a caching layer could help even further. Our friends at Microsoft Research had just the right tool for the job: a fast, scalable key-value store called ObjectStore. With a cache in place to store the results of object detection, we were able not only to decrease latency further but also to save 75% of the GPU cost.
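The caching idea can be sketched in a few lines of Python. This is a simplified illustration, not the production design: an in-memory dictionary stands in for ObjectStore, `CachedDetector` and `fake_gpu_detect` are hypothetical names, and treating the share of cache hits as the share of GPU work avoided is our simplifying assumption about how a saving like 75% could arise.

```python
from typing import Callable, Dict, List


class CachedDetector:
    """Wraps an expensive detection call with a key-value cache."""

    def __init__(self, detect_fn: Callable[[str], List[dict]]) -> None:
        self._detect_fn = detect_fn
        self._cache: Dict[str, List[dict]] = {}  # stand-in for a key-value store
        self.hits = 0
        self.misses = 0

    def detect(self, image_key: str) -> List[dict]:
        # Serve repeated views of the same image without touching the GPU.
        if image_key in self._cache:
            self.hits += 1
            return self._cache[image_key]
        self.misses += 1
        result = self._detect_fn(image_key)  # the expensive GPU inference
        self._cache[image_key] = result
        return result

    def gpu_work_avoided(self) -> float:
        # Fraction of requests that never reached the detector.
        total = self.hits + self.misses
        return self.hits / total if total else 0.0


def fake_gpu_detect(image_key: str) -> List[dict]:
    # Hypothetical detector returning one fixed result per image.
    return [{"category": "handbag", "image": image_key}]


if __name__ == "__main__":
    detector = CachedDetector(fake_gpu_detect)
    # Eight page views spread over two popular images.
    for key in ["a", "b", "a", "a", "b", "a", "b", "a"]:
        detector.detect(key)
    print(detector.hits, detector.misses, detector.gpu_work_avoided())
```

In this toy traffic pattern, six of the eight requests are served from the cache, so only a quarter of the views trigger inference. A real deployment would also need eviction and invalidation as the index changes, which this sketch leaves out.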

Another way Azure helped us increase efficiency was through its elastic auto-scaling. We always design our services to provide a smooth experience even at peak load. Such loads, however, don't occur very often, and traffic fluctuates significantly between different hours of the day as well as between weekdays and weekends. Thanks to Azure Service Fabric, Microsoft's microservice framework, which we used to implement our Object Detection feature, we managed to make it reliable, scalable and cost-efficient.
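To see why elastic scaling matters, consider a back-of-the-envelope capacity estimate in Python. This is not how Service Fabric decides to scale; `gpu_instances_needed` is a hypothetical helper, the traffic numbers are invented, and we assume each instance handles one inference at a time. The 0.5 seconds per inference is simply 1.5 seconds divided by the 3x speedup, and the 0.75 hit rate reuses the cache saving as a stand-in.

```python
import math


def gpu_instances_needed(requests_per_second: float,
                         cache_hit_rate: float,
                         seconds_per_inference: float) -> int:
    """Rough instance count, assuming one inference at a time per instance."""
    # Only cache misses reach the GPU-backed detector.
    gpu_requests_per_second = requests_per_second * (1.0 - cache_hit_rate)
    # Each instance is busy for seconds_per_inference per request.
    busy_instances = gpu_requests_per_second * seconds_per_inference
    return max(1, math.ceil(busy_instances))


if __name__ == "__main__":
    # Hypothetical traffic levels, for illustration only.
    peak = gpu_instances_needed(400, cache_hit_rate=0.75, seconds_per_inference=0.5)
    quiet = gpu_instances_needed(40, cache_hit_rate=0.75, seconds_per_inference=0.5)
    print("peak:", peak, "quiet:", quiet)
```

With these made-up numbers the quiet-hour requirement is a tenth of the peak requirement, which is the gap an elastic deployment can avoid paying for around the clock.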

Ultimately, with Azure, ObjectStore, and NVIDIA technology we built an object detection system that can handle a full Bing index's worth of data under peak traffic and provide a great experience to users while making cost-effective use of resources.

What's Next?

We are constantly working on improving the precision and recall of the model by experimenting with even more sophisticated networks. We are also working on scaling up beyond the initial fashion categories, as well as expanding to other domains.

Please note that Object Detection is currently available only on desktop. Mobile support is still in the works and is expected to be released in the coming months.

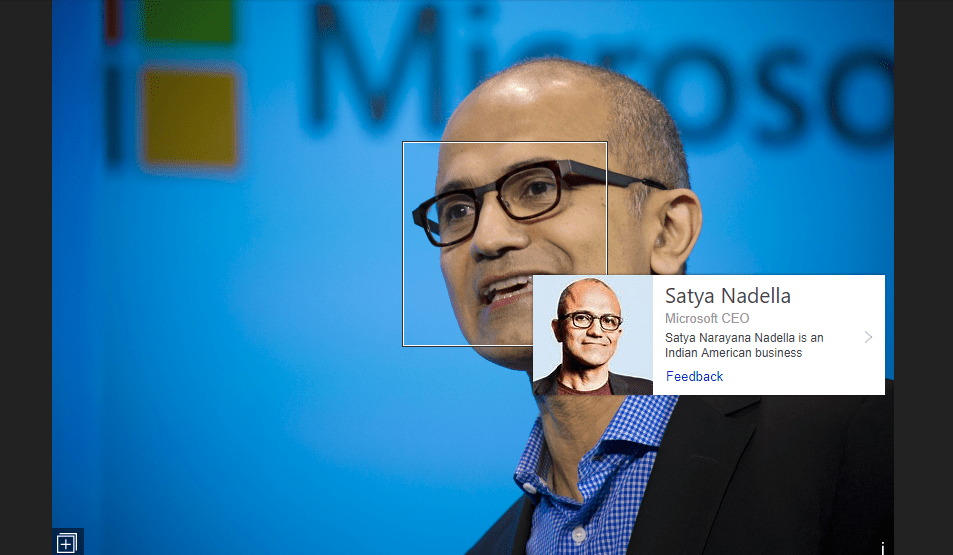

Finally, note that Bing also supports Celebrity Recognition which to the user may look somewhat similar:

Celebrity Recognition, however, is based on a Face Detection Model, which is different from the Object Detection Model discussed in this article. It will be covered in one of our upcoming posts.

We hope that Bing Visual Search with Object Detection will make your visual explorations so much more enjoyable, streamlined and fruitful. Stay tuned for more improvements, and do let us know what you think using Bing Listens or the Feedback button on Bing!

Source: Object Detection for Visual Search in Bing | Search Quality Insights